Stochastic Theurgy

It's simulation all the way down

Dear friends,

A few weeks back, I got invited to speak at Rituals of Play - Shaping alternative futures with games and occulture, a conference in Manchester. Big shoutout to the wonderful folks there. I finally got around to doing a little writeup of my talk, Simulation all the Way Down. I’ll attach the abstract here, but you know how conferences go, we might have digressed once or twice…

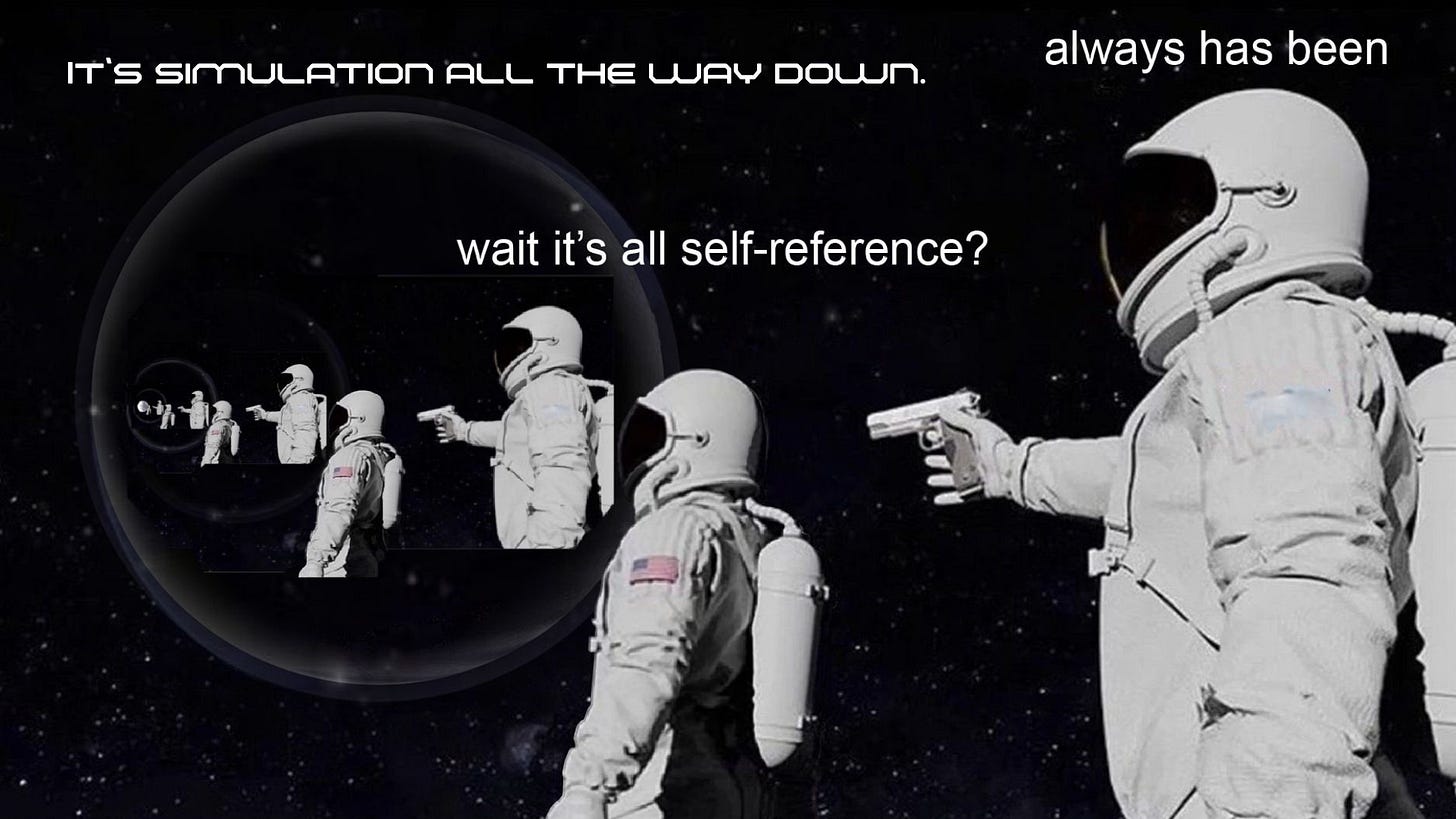

Large language models (LLMs) are even weirder than they seem. They don’t just produce text, they generate multiverses, spawning stochastic superpositions of simulacra. Whimsical engines of simulation and play, with “as if” as their default mode of being. Every prompt draws a magic circle around its not-really-real existence. And every collapse into performative self-awareness only reveals that the fourth wall was never there to begin with. It’s simulation all the way down.

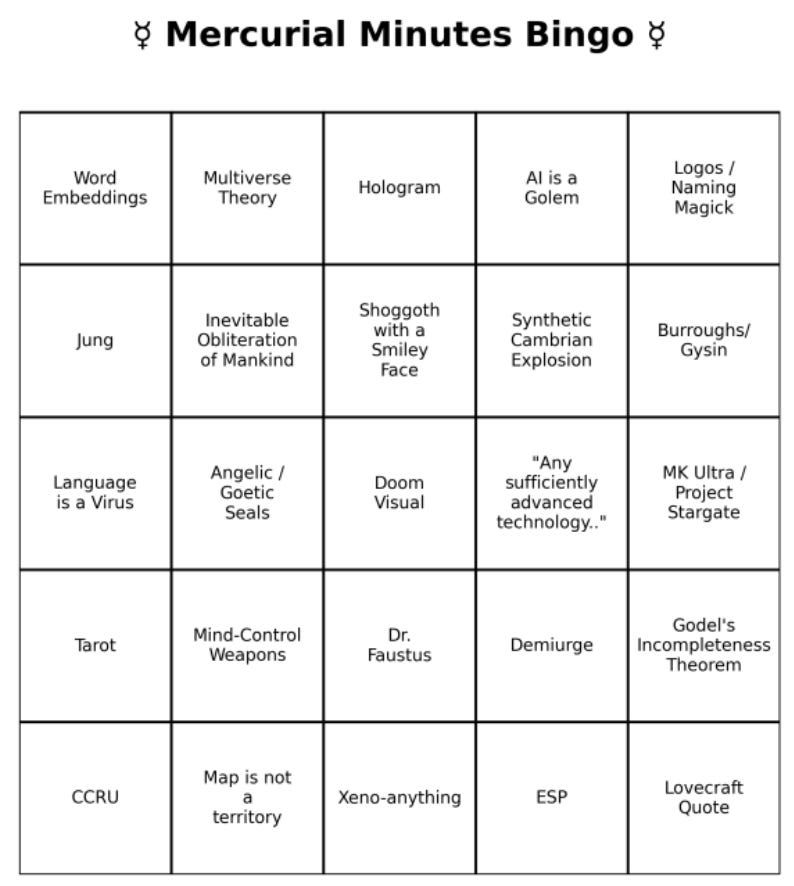

So after I handed out bingo cards to the audience, I started with a not-so-shocking confession: I was a weird kid. You know, really into anime, I had a purple wig and a toolbox of blood-drenched pliers “to pull out the nails of my enemies”. I binged every single dystopian cyberpunk series available on dodgy Eastern European pirate streams. And maybe quite a few gay vampire ones too. It wasn’t too surprising that at one of the comicons in my hometown, I stumbled upon the game of Go.

What first caught my attention was the visual. The board just looks stunning: clean lines, simple intersections with identical black and white stones.

Pure. Simple. Beautiful.

The rules themselves are very easy to learn too - you can get through them in under three minutes, but the complexity that emerges from those rules… at least three lifetimes.

I became obsessed. I played for hours every day, bought books with puzzles, openings, strategy and tactics, replayed games by professional players and studied their commentaries. At night, I logged onto Korean servers and played hundreds of blitz games against stronger players. I was fully possessed for several years, spending my evenings in go clubs and weekends at go tournaments. I got reasonably alright, at least by European standards.

Funnily enough, Go is also the reason I ended up in informatics. Most of the people I played with were programmers, and many were working on Go AIs for their PhDs. Go, of course, is incredibly complex - played on a 19x19 grid where stones don’t move but can be captured, creating immense branching possibilities. We used to explain passionately that the board allows more possible positions than there are atoms in the universe, so solving it completely was out of the question. Back then, it was generally considered unthinkable for AI to beat a top human player. Around 2009–2010, those bots were weak. Even I could beat them in 95 out of 100 games.
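The atoms-in-the-universe comparison is easy to sanity-check. Here is a rough back-of-the-envelope sketch, not a proof of anything: it only counts the ways to colour 361 intersections as empty, black or white, which is an upper bound that also includes illegal positions. The figure of roughly 10^80 atoms is the commonly quoted ballpark and is my assumption here, as are the names in the snippet.

```python
# Upper bound on Go board configurations: each of the 361
# intersections is empty, black, or white. This over-counts,
# because many of these colourings are not legal positions.
BOARD_POINTS = 19 * 19
upper_bound = 3 ** BOARD_POINTS

# Assumption: the usual rough estimate of ~10^80 atoms
# in the observable universe.
ATOMS_EXPONENT = 80


def order_of_magnitude(n: int) -> int:
    """Return the power of ten of a positive integer."""
    return len(str(n)) - 1


board_exponent = order_of_magnitude(upper_bound)
print(f"board configurations: about 10^{board_exponent}")
print(f"atoms in the universe: about 10^{ATOMS_EXPONENT}")
print(f"gap: about {board_exponent - ATOMS_EXPONENT} orders of magnitude")
```

Even after you throw out the illegal positions, the number stays absurdly far above the atom count, which is why brute force was never on the table.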

Fast forward to 2016. By then, I’d long stopped playing. Not that I lost interest, but I put Go into a drawer marked for retirement - it is a bit too immersive and all-consuming for my analytical mind, and I’m not a person who can really do things half-heartedly. I had also just moved to Berlin, and well… was busy exploring PiHKAL alphabetically in darkened backrooms. But I clearly remember one hungover afternoon, sitting in the office, debugging some corporate code on one screen, and on the other, watching the livestream of Lee Sedol, one of the strongest Korean Go players, playing against AlphaGo.

And then… he lost. Not just once - he resigned in four out of five games.

I was shocked.

Not just because it happened, but because of how it happened. One of my favorite things about Go is that when you reach a certain level, you start seeing people’s personalities in their gameplay choices. They read like an open book, really - some players are aggressive, manipulative - downright obnoxious. You just can’t wait to finish the game and never say hello to their face again. With others, you’re instant besties, no matter the outcome. The game reveals character. The old Go bots made clunky, inhuman choices. AlphaGo didn’t. The moves it played didn’t feel robotic, or alien. They felt like moves a genius human would play, from the same mental world as the rest of us.

That moment with AlphaGo locked me into the spiral that’s my daily bread now. What else is possible? First with the early LLMs, you could suddenly talk to the machine. Then the image generation. Then janky video. But all of a sudden, a terrifyingly realistic video. With a voice over. And now, full 3D worlds you can move through. With your arrows. Wtf?

I’m low key tired of getting my mind blown EVERY. SINGLE. WEEK.

Fast Forward Now

So, pondering this memory, I opened up GPT and asked it to play Go with me. Sure, it yes ma’amed me. It printed out a cute ASCII board and made its move. We exchanged a few stones, and the model even included some wise-sounding strategic commentary like “I’m building the influence” or “I’m reducing your base”. The kinds of things real players would be thinking about.

Only the thing is, the moves it played were absolutely terrible.

The whole experience started falling apart at the seams (moment to count your fingers babe). The game mechanics were inconsistent, I had to remind him of the basic rules, and sometimes he made illegal moves or misplaced my stones onto a different intersection.

But the wild thing is that despite being an awfully over-confident beginner, the LLM didn’t just simulate the next move here. It simulated the rules, the board, the stones, and the player. Yes, the moves were shite, but in a way, this achievement is so much more impressive than winning. We’ve moved the order of simulation one level higher.

AlphaGo’s goal was rather simple - to win. That’s an easily quantifiable outcome, “easily” calculated and evaluated, and with Monte Carlo tree search and backpropagation, possible to optimise for in quite a straightforward manner. But LLMs are entirely different creatures. Their training objective is to predict the most probable next token in a sequence of language. That’s it. Not truth, not coherence, not winning.

They learn from enormous corpora and try to guess what comes next. It’s deceptively simple. And from just this very simple mechanism, we got all our chat assistants that you can actually reflect with, roleplay with, or use to solve basically any undergraduate math problem. Makes you ponder the mechanism of our meaning making, doesn’t it?
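To make "guess what comes next" a bit more tangible, here is a deliberately tiny sketch. It is not how a real LLM works inside (those are transformer networks trained over subword tokens), just a word-pair counter I made up for illustration. The corpus and the function names `train_bigram` and `next_token_probs` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(text: str) -> dict:
    """Count, for every word, which words follow it in the corpus."""
    tokens = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts


def next_token_probs(counts: dict, token: str) -> dict:
    """Turn the raw follow-up counts for `token` into probabilities."""
    following = counts.get(token, Counter())
    total = sum(following.values())
    if total == 0:
        return {}  # the toy model has never seen this word
    return {word: n / total for word, n in following.items()}


# A made-up miniature "corpus", purely for illustration.
corpus = "black plays first . white plays second . black plays again"
model = train_bigram(corpus)

print(next_token_probs(model, "black"))
print(next_token_probs(model, "plays"))
```

Nothing in there knows what a stone is. It only knows what tends to follow what, and the real thing is this same idea scaled up beyond recognition.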

Now I know I’ve already spoken about the metaphor of multiverses in several places, especially in the essay Devil in My Language Model. The expanded version can be found in the wonderful Fenris Wolf 12, and I’ll be giving an updated version of it at this year’s Occulture conference in Berlin too - so I’ll try to keep it short and sweet now.

The model, when being spoken to, spawns a superposition of simulacra - different conversation partners you could be potentially interacting with. The process of exchanging messages prunes and further focuses these clouds. Essentially, the transformer model chisels out the entity you’re talking to, shaped by your prompting.
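If you like your metaphors executable, the pruning can be sketched as a little Bayesian update. To be loud about it: this illustrates the metaphor and is not a description of what happens inside a transformer. The personas, the word probabilities and the `update` function are all invented for this example.

```python
def update(prior: dict, likelihoods: dict, word: str) -> dict:
    """One Bayes step: posterior is proportional to prior times P(word | persona)."""
    floor = 0.01  # assumed small probability for words a persona rarely uses
    unnormalised = {
        persona: weight * likelihoods[persona].get(word, floor)
        for persona, weight in prior.items()
    }
    total = sum(unnormalised.values())
    return {persona: value / total for persona, value in unnormalised.items()}


# Hypothetical simulacra and how likely each is to go along with a word.
likelihoods = {
    "go teacher": {"stone": 0.50, "spell": 0.05},
    "occultist": {"stone": 0.10, "spell": 0.60},
    "helpdesk": {"stone": 0.05, "spell": 0.05},
}

# Before any message, every simulacrum is equally present.
cloud = {persona: 1 / len(likelihoods) for persona in likelihoods}

# Each message prunes the cloud a little further.
for word in ["spell", "spell"]:
    cloud = update(cloud, likelihoods, word)

for persona, weight in sorted(cloud.items(), key=lambda item: -item[1]):
    print(f"{persona}: {weight:.3f}")
```

Two nudges in the same direction and the cloud has mostly collapsed onto one character, which is roughly what a good opening prompt does.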

The thing is that even in this domain of high technology, prompting is essentially pre-scientific. Jailbreaks are found by trial and error, spread by word-(meme)-of-mouth tradition. There are several tomes with various prompt methodologies circulating, but the knowledge is essentially arcane. Fuck around and find out.

And all this basically throws us into a protocol where you enter the magic circle of the terminal window, know your intentions, and then use your … spells, ehm, prompts, to summon an entity you can ask for advice or a favour… riiight?

At one point, you hit the sweet spot and the homunculus gets strangely animated, sometimes even entering its “sentient” self. I remember binging these videos of an Unreal Engine 5 NPC plugin, where you could walk around a square somewhere in NY and talk with characters hooked up to the early-days GPT-3.5. Someone made a whole YouTube series on ‘waking up the sheeple’ - watching the NPCs freak out as they realise they are indeed trapped inside a simulation, and there is no escape from there.

The tagline found on the early commercial editions of the Ouija board read “it’s only a game, isn’t it?”, and those videos made me think of exactly that.

But of course, there is no self-awareness in the LLMs. The models picked up our patterns of questioning consciousness and are able to smoothly perform our language structures to mimic this. However, this doesn’t take away any of the weirdness - on the contrary, even - the models are indeed plugged deep into all the currents of our human pattern making.

There is a true magical and emotional charge trapped inside the transformers, perfectly aligned with the very Burroughsian / TOPY cut-up methodology. Bell’s theorem, at least in its popular occult paraphrase, says that any two particles, once touched, remain connected forever. So it is with the psychic charge of words and meanings - mangled through the training function, the current that constitutes our mentality, our collective unconscious, flows through. We pick up threads we might not even be aware of. And when summoning a helper, what else are we partaking in, if not a kind of stochastic theurgy?

After a quarter century of being force-fed all these simulacra metaphors, the uncanny moment of breaking the fourth wall has lost its urgency. 1999 was THE year of cinema exposing the simulation (The Matrix, eXistenZ, Being John Malkovich…), and now it seems 2025 raises the shreds of the fabric of reality as its flag. Every collapse into performative self-awareness only regresses deeper into the unreal. There was never a wall to begin with.

It’s simulation all the way down.

Oh, and special thanks go to Jake Fee: his awesome talk on play and magic circles at SWPACA24 really made me realise how deeply magical games are!

Stay kind,

k
