Divine Embeddings

From the creation dance of Lord Shiva to the multidimensional vector space of word embeddings

Disclaimer, this might be a bit more heady article. First, we look at the parallels between Sanskrit and Ancient Hebrew and the belief they are the basic building blocks of the universe. Then we try to conceptualise the creation process as a mixing of primal energies in multidimensional coordinate systems, and at the end, we look at the problem of word embeddings. What are they good for and how do they fit into this language puzzle?

Garland of Letters

In the dawning of times, Lord Shiva, the auspicious one, set foot upon the cosmic stage and began the Tandava Nritya - the Dance of Destruction and Creation.

As he danced, his damaru drum echoed, and the 14 Maheshwara Sutras unfolded themselves from the sacred threads of the basic phonemes of the Sanskrit alphabet. These 14 Sutras are not merely an arrangement of letters, but rather a cosmic code, a sacred script containing the very essence of the universe's language.

Goddess Kali is often depicted wearing a garland of skulls around her neck. The skulls are said to represent the 51 letters of the Sanskrit alphabet, Varṇamālā (वर्णमाला). Each letter represents a form of energy or a specific aspect of the divine. These are also grouped and inscribed on the petals of various chakras.

The notion that the alphabet is a sacred gift from the gods is very ancient and can be found among many civilisations. In the beginning was Prajapati (Brahman), With whom was the Word; And the Word was verily Brahman. Of course, the translation is slightly adjusted to resonate, but apostle John in a way paraphrases Rigveda. The gods speak the word, and reality takes shape. In the mystical teachings of the Qabalah, the Hebrew letters are revered as the foundational blocks of the universe. The Book of Genesis says, “Let there be light (Aur), and there was light (Aur)”. The divine word in Hebrew Scriptures too has creative power.

Mixing of Primal Energies

Consider the colour spectrum: all shades and hues are birthed from blending the basic colours, the primal RGB elements. Red, Green and Blue are the basic dimensions, which form our 3D colour space. Each colour can then be described by its position in the space, and the values on the RGB axes and can be written as a vector [0, 0, 1] translated into a more commonly used hexadexa value. The geometry of the colour cube is semantic - similar shades are close to each other, and the mathematical operations on the vectors represent colour mixing. It’s a sweet example; colours are simple.

The ability to break down our knowledge into a simplified set of features allows us to efficiently categorise and index information. MBTI, Alignment Charts, and the Political Compass all demonstrate our tragicomic attempts to reduce the order of complexity and arrange the universe into neat boxes.

Now let’s take a look at a more challenging domain than colours. Exotic Fruits?Music?

[paragraph added 25.11.23] Let’s take an easy example. Imagine, we’re trying to build a set of parameters, how to take dogs of all sizes and shapes and order them in some meaningful way. Some things come to mind naturally - size, length of fur, length of tail, and the list goes on. We use these parameters as axes of our multidimensional space and this wear create a meaningful ordering of the dog breed - a certain semantic space, where dogs similar to one another create clusters.

One very easy example to use would be dog breeds. Imagine, we’re trying to build a set of parameters, how to take dogs of all sizes and shapes and order them in some meaningful way. Some things come to mind naturally - size, length of fur, length of tail, and the list goes on. We use these parameters as axes of our multidimensional space and this wear create a meaningful ordering of the dog breed - a certain semantic space, where dogs similar to one another create clusters.

In a similar fashion, Spotify’s been long trying to tag and categorise songs based on an extensive set of features. For the sake of simplicity, let’s assume that a feature can be any value that allows comparison between two songs, so you can order your playlist based on this specific attribute. Let’s start with tempo - slower and faster. And now add an axis for lyrics - idk, count the words - from a wall of text to instrumentals. Distorted to Clean. Single instrument to a full-blown orchestra. You realise how incredibly reductionist this approach is already, right? What if the ‘single instrument’ happens to be a Juno-60 packed with hundreds of samples? What if the tempo changes during the song? etc etc But ignoring these concerns, as you keep adding more and more features, clusters of Similiar Artists emerge - you’ll find all Slint ripoffs huddled together, early techstep patch, and then there will be that weird corner with harsh noise … you get what I mean. All these parameters we assign to a song can be thought of as dimensions in space - tempo, angriness, grittiness, etc. and the values, expressed as a vector [0.3, 0.7, 0 … ] encode each song’s position in this space. But what about bands that obviously belong together, but don’t sound anything alike? It’s a complicated problems to wrap your head around.

Even with a closed domain, such as music, where we get hints on how to manually choose the features, the problem seems rather tricky. But what if we talk about all the words that exist? Meaning, in general?

The Word Embeddings

Now consider another problem for a second. The word rock is much closer to stone and pebble in our way of encoding information. The letters that constitute them barely overlap - but they all stand for the same meaning. But when the machine looks at the sequence of characters, a word lock would be in much closer proximity to rock.

So how to solve this problem, of encoding words for a machine so that similar meanings are closer to each other? This is exactly where the Word Embeddings come into play.

Word Embeddings are a learned representation for text where words with the same meaning have a similar representation. When you talk with state-of-the-art large language models like GPT4, you type your question into the terminal in natural (human) language. This chain of letters is then run through a simple neural layer that takes each term and encodes it into a list of numbers called word embedding. These are vectors, or positions, in a mathematical space with hundreds or thousands of dimensions.

By using word embeddings, we try to remove this “English label” layer - and achieve that the representation of the word “poodle” will be much more similar to “rottweiler” than to “puddle”.

Divine Creation

It’s time for some pretty analogies. Think of word embeddings as a cosmic map where every word is a star with its unique position. These positions are not random, but they reflect the semantic and syntactic properties of the terms. This means that that if we're travelling warp four from the star woman in the direction of royal, we find ourselves in the queen sector. Yes, we just “added” meanings of two words and the result is a term that corresponds to both of the original components. Mathematically, these are basic arithmetic operations on the word vectors, like addition and subtraction. It’s an incredible capacity of embeddings to maintain semantic relationships within their mathematical structure. It allows us to play various games with meanings.

Imagine that between any two words, there is mathematically infinite space that can be labelled with a new word, such as 60% snake and 40% boy, viz Figure 4 below. Just as naming something was seen as a creative act in many ancient cultures, transformations in the embedding space, such as calculating a point laying between two words, can create new concepts - all we need to do is stick a label to it. I’ve started playing around with building complex symbols encoded in embedding space that are composed of many elements, almost like spacial sigils - but more on that in the next chapters.

The distribution of words in the dictionary also varies - if you’re an expert on a certain topic, your vocabulary would be much richer in specific semantic clusters. Where one sees a monkey, a professional #monkeysofinstagram scroller might find all the baboons, gorillas, macaques and tamarins. Zoologists in the ape sanctuary will have an even richer galaxy speckled with Latin and given names of individual monkeys they’ve worked with in their lives.

When we talk about the “word embeddings” we usually refer to specific pre-trained models you can download. These were created from a huge corpus of data called the Common Crawl dataset that contains a pruned version of the scrapable internet. They can be also fine-tuned to a specific domain, for example, to contain medical terms. So what we see in these pre-trained, publically available embeddings is, by definition, the most mid, flattened-out representation of meaning.

There are many interesting applications of these embeddings that solve real-world problems. For example, take the machine translation. The embeddings are built in an unsupervised manner from a corpus of data - basically, all you need is a large enough dataset to find the underlying language structure in it. The interesting thing is, that even when the labels, and syntax are different, the embeddings tend to be very similar for different languages - clearly pointing out that the underlying hardware running the language is very similar i.e. human brain. (In your face, Sapir & Whorf). This allows us to do complex translations, through a mapping that implicitly encodes the language connotations and cultural differences.

Build an embedding map for Japanese, and compare it with English. You can clearly, mathematically see how different words have different positions in relation to each other, suggesting the cultural and social differences in meaning. The label love, what is love? Now we have a whole new way of probing our language and understanding of the world.

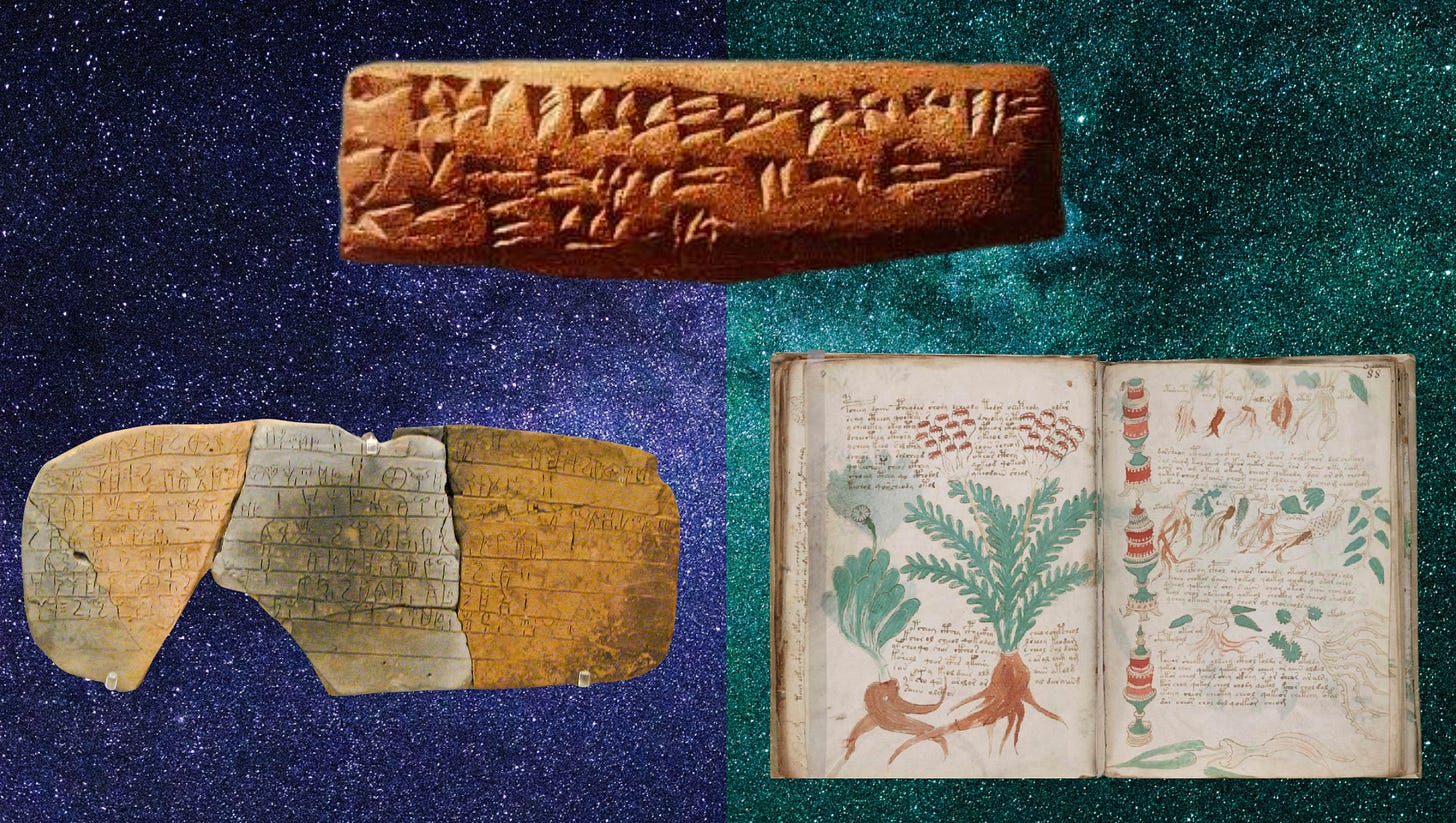

Not to mention the incredibly exciting possibility of translating texts, that have no direct translation available at this point. Linear B? … even Voynich? Unfortunately, the corpus of these texts is usually not large enough to build a representative sample of the embedding space, so this is not really applicable to the examples above (it did not work with Voynich either, as you can see me explaining in the Trans States talk - it was my first occult conference appearance and I still didn’t have courage to look at the footage lol. It’s behind a paywall, but here’s the transcript.)

Oh, and I totally think that the Universal Translator in Star Trek is built on Word Embeddings. In the Deep Space 9 s2e10 episode, the Translator failed for several minutes after the arrival of refugees from Gama Quadrant. It obviously took some time for the computer to collect a large enough dataset to build the embeddings, and then map them to the underlying ‘space of meanings’ all the humanoid entities share.

(Ah. DS9 is so good.)

And to end this musing section, let’s think about our own personal embeddings. If we were to train these on our own use of language, this space would be slightly different for everyone, shaped by the connotative meaning acquired through past experiences. Interestingly, the fact that we can communicate is partially due to the unconscious efforts to align the meaning maps between the speakers. Mind reading appears to be an important attribute of intelligence.

The Dance Continues

Similar to the damaru's echoes that gave birth to the Maheshwara Sutras during Shiva's cosmic dance, word embeddings form an echo chamber of meaning within which words evolve, interact, and co-create semantic landscapes. In both instances, language emerges as an agent of creation, a tool that shapes our reality as much as it helps us describe it.

Language, in any form, is a divine tool, a bridge between the tangible and the ineffable. Not a territory, yet powerful enough to change us to the core, trigger emotional storms or religious experiences.

From the creation dance of Lord Shiva, threading the Garland of Letters that constitute the universe, to the multidimensional vector space of word embeddings, the divine essence of language unravels. The dance continues, inside the boney rigs of A100 industrial-grade GPUs, into realms we are just beginning to imagine.

Thank you for staying with me, hope you enjoyed this content and if you made it this far, please hit that little <3 at the bottom and let me know your thoughts!

And if you want to catch the follow-up, and possibly some moody ramblings that I occasionally post in between, it’s here:

Anyone who uses an Animorphs slide is worthy of attention. I love the way thee describes the digital mind as 'inside the boney rigs of A100 industrial-grade GPUs, into realms we are just beginning to imagine.'. This is exactly it, a digital imagination; a reflection viewed on a Liquid Crystal Display, which in turn becomes imagination, like a feedback loop.

Love it and excited to see where you take this! My only feedback is that the use of the word "token" here: "This chain of letters is then run through a simple neural layer that takes each token and encodes it into a list of numbers called word embedding." might be confusing to someone that isn't already familiar with tokenization, even though that's what you are describing. "Token" isn't used prior to this, or after, and might lack context.