Voynich Manuscript

Crash course in computational linguistic, alien scripts and medieval hoaxes

If you bear with me, you might find out more about the Voynich manuscript, computational linguistics and the machine learning attempts to decipher this 500-year-old mystery.

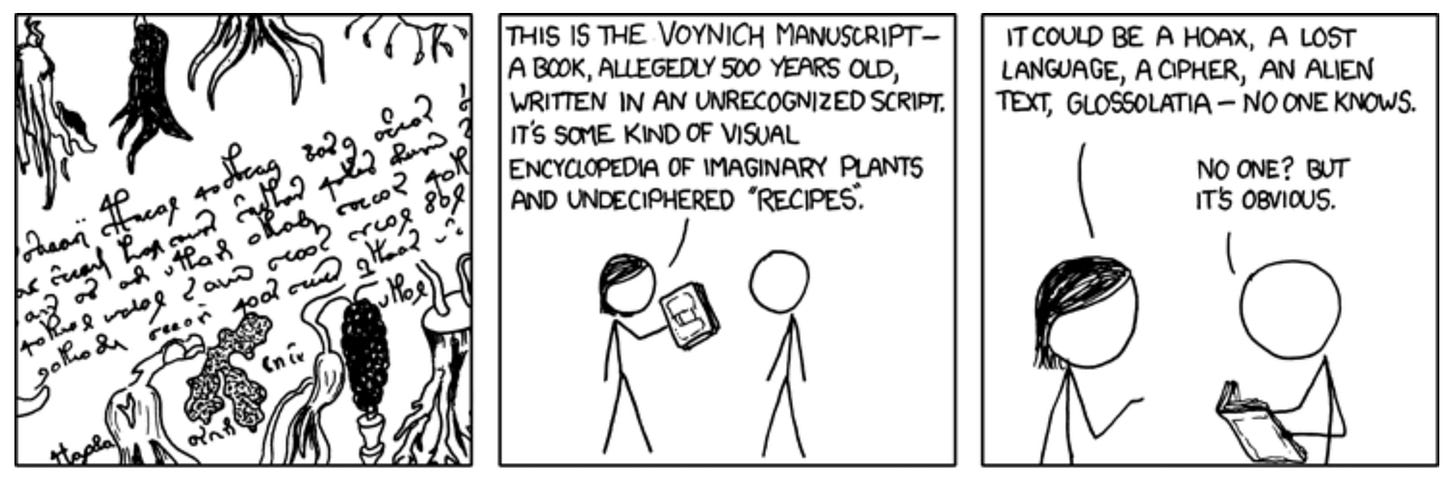

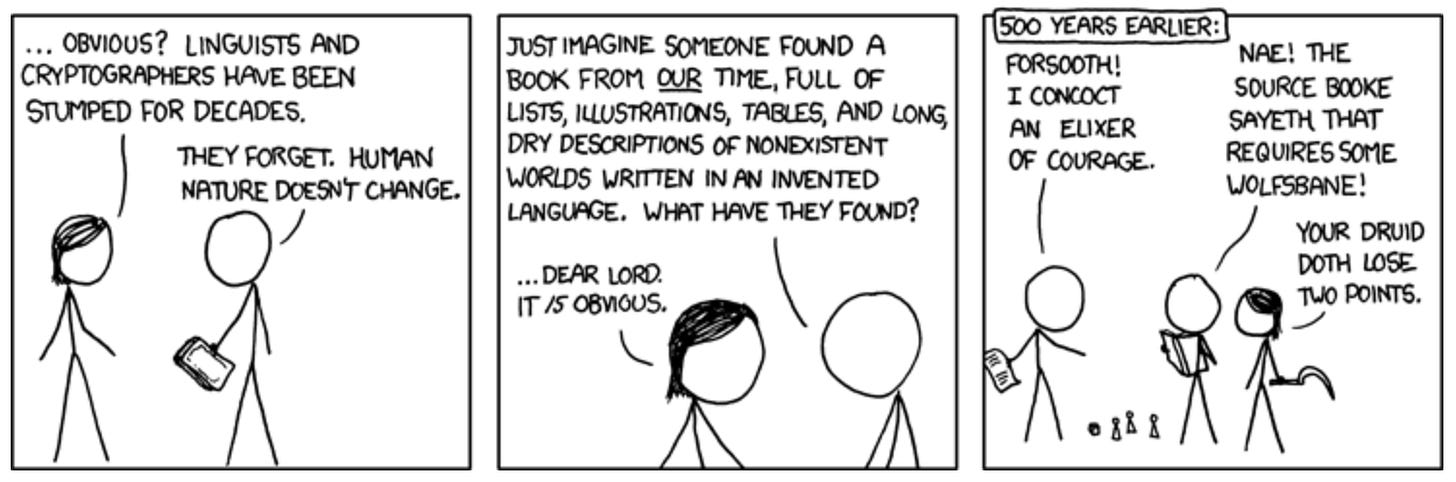

The mysterious manuscript

The Voynich manuscript is a medieval codex consisting of 240 pages hand-written in a unique script accompanied by beautiful colourful illustrations. It was carbon-dated to the early 15th century, and the author is unknown. So is the language. And so is the purpose. The manuscript changed owners many times, and the history of its physical presence would be worth a separate article, but I want to focus on a different aspect of this mystery: Voynich manuscript has been referred to as the world’s most important unsolved cypher.

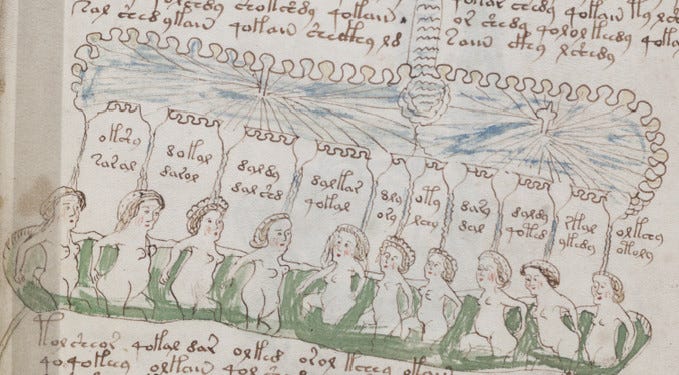

The only thing that hints at the manuscript's content are the illustrations, usually divided into six sections: Herbal, Cosmological, Astronomical (Astrological), Pharmaceutical, Recipes and my favourite, Balneological (containing bathing women looking really comfortable).

Solving an unknown cypher in an unknown language is one of the most challenging tasks in cryptography. The statistical analysis of the manuscript reveals that it’s not straightforwardly compatible with any of the natural, exotic or lost languages and monoalphabetic (simple) substitution cyphers. Yet the text is far from random and appears to have a solid morphological and syntactic structure.

The list of possible theories is overwhelmingly long and quite entertaining. Many cyphers were suggested: substitution and transposition cyphers, an abjad (a writing system in which vowels are not written), steganography and semi-random schemes. The options for the origin of the underlying 'Voynichese' are ancient Hebrew, Aztec’s Nahuatl, Manchu language, Middle High German and many more.

Regarding the purpose, it could be a pharmacopoeia, a Hygiene Manual or Alchemy textbook. It supposedly contains secrets given to Judas by Jesus Christ, Erich von Däniken links it to the Book of Enoch, and of course, there is the general air of aliens, every shape and kind. You can pay two grand for a seminar where you get to read the manuscript contents with your third eye. (My third eye failed the task miserably, and I was staring at those pages under the most altered circumstances.) Someone1 claimed to have been given the key to solving the manuscript by an alien prisoner held at Area 51. My favourite is undoubtedly the theory that the secret held by the Voynich Manuscript detailed the spontaneous creation of DNA through the use of sound.

And, of course, it can all be an elaborate medieval hoax.

Computational Linguistics

Now let’s get to a more technical part. I’ll give you a brief overview of the genealogy of machine translation algorithms and relevant NLP technologies (we’re talking natural language processing, not neurolinguistic programming ). Please feel free to just skim through the more mathematical bits. With a bit of context, we can dive into multiple papers on the Voynich Manuscript deciphering attempts later.

From Dictionaries to Babel Fish

The first known prototype of a translating machine was proposed in 1933 in Soviet Russia by Peter Troyanskii. His idea consisted of a typewriter, camera lens, and many mechanical switches to deliver the desired card with translated word into your view. Labelled as useless, the prototype was never finished and mechanical translation was put on hold. At the beginning of the Cold War, the automated translation of communist scheming became a very lucrative topic and the first real attempts at the mechanization of the task were undertaken by IBM. A successful translation of over 60 Russian sentences opened a flow of generous government dotations into the field of computational linguistcs.

Until the 90s, the basic paradigm remained the same - the languages need to be connected through a translation, or at least a good dictionary and a set of syntax rules, to find the translations. With the rise of computational power, IBM was able to process a large corpus of data to achieve quite reliable translation attempts and consider the larger context of the sentence through Statistical Machine Translation.

Finally, in 2016 Google announced their first deep learning language translator that’s most likely accessible to you right now through a right mouse click. (More on social problems with this in my previous article.) This neural model uses a completely different approach than any previous translation attempts.

Google dismembered the language and turned the words from semantic tokens into abstract mathematical representations - vectors. They can be seen as points in a multi-dimensional space (usually around ~100 dimensions). Every word has its placement in this space that implicitly encodes its semantic meaning and relation to all other words in the space. Working with such ‘embeddings’ allows previously unseen operations on the words.

woman + king = queen

This embedding space follows certain rules and geometry. For any two words, their distance, sum or difference can be calculated. Words with similar meanings are clustered close to each other. The word “Poodle” will be much closer to “Rottweiler” than to “Puddle”.

The most popular example in every NLP course involves encoding “king” and “woman” into two vectors. Then, you can sum these two words to see what’s their added meaning - the results will indicate a point in space that is very close to the word “queen”.

My mind was properly blown when I first found out about it. You can play with words in the most abstract sense, substracting meanings from each other building new ones. This is an incredible and very profound concept from a philosophical perspective as well. It indicates that between any two ‘concepts’ in the space, there is a continuous, infinite number of distinct meanings. Our language is like a few discrete cut out holes in the screen that shields us from this immense continuous ocean of essences.

Burn the Gazetteers

This change in the paradigm has one very important implication: if we are able to represent the meaning of a word implicitly, we don’t care what labels are assigned on the top. As a result, the embedding map will look similar for English, Hebrew, Chinese and Linear B, because the inherent structure of what we’re looking at is a human language.

This allows us to semantically map languages without the need of having any direct translation between them. And if it doesn’t blow your mind just yet, try and think about all the points in this space that carry profound meanings, yet we don’t have them mapped to any real ‘words’.

Linguistics Breakthrough with Machine Learning

The rise of deep models in the past years caused a great publishing fever in computational linguistics. A few neat mathematical tricks enabled the use of small datasets, which opened the playground for deciphering many lost languages where we have only very small samples available. And it did it very successfully! In 2019 the algorithm learned the Ugaritic almost perfectly and successfully decoded 67% of Linear B.2

The Voynich papers

Let’s tie it all together now. Some people in machine learning have already tried deciphering the manuscript with state-of-the-art algorithms. I reviewed a few of the available papers, so let’s take a look at how far are we from the final breakthrough.3

Let’s start with a paper exploring relatively simple algorithms. The authors were able to find out some evidence of morphosyntactic clustering, which implies that the manuscript is written in a language that has certain structural rules of word building. We already knew that.

The next thing they explored was the topic clustering. As we mentioned at the beginning of the article, the manuscript has sections with seemingly distinct topics - based on the pictures accompanying the text. These partitions seem to contain many specialized words that enable us to see various topic clusters - multiherbal section, astrology, the bath chapters all have their specific vocabulary. The paper provides a couple more insights into the morphology of words, but we can’t say it got any closer to decoding the text.

Another analysis published on this blog reveals insights from playing around with embeddings mentioned previously. The two images below show that the names of the stars from one of the astronomical illustrations are actually embedded really close to each other in the semantic space - but we don’t have enough data to be able to infer the meanings of these tokens.

The most hyped article that took the internet by storm (my internet at least) was 2017 “Decoding Anagrammed Texts Written in an Unknown Language and Script“. The paper first tried to guess the underlying language through different methods. The character frequency analysis suggests it to be Mazatec, a native American language from southern Mexico. Another two methods suggest ancient Hebrew, which is partially justified by the origin of the manuscript as well, so the paper further operates on this assumption.

As a next step, they mapped different glyphs to letters of ancient Hebrew, created anagrams (randomly shuffled letters of the word) and searched for possibly valid sentences. This step is very familiar to anyone who dabbled in Mystical Qabalah - I’m not surprised at all that they found *something*.

Supposedly, the first line is deciphered into Hebrew as:

(VAS92 9FAE AR APAM ZOE ZOR9 QOR92 9 FOR ZOE89)

ועשה לה הכהN איש אליו לביחו ו עלי אנשיו

This phrase is grammatically incorrect but roughly translates as:

“She made recommendations to the priest, man of the house and me and people.”

Hm.

… So what do you think? Is the secret spilt? Did you get those Real Truth™ shivers down your spine? Or did the monkey press random letters on the typewriter long enough to produce a sentence that looks like human language?

Other Voynich Resources

The longer I’m digging into this topic, the more I realize how vast the mystery is. Even if the manuscript is a hoax, or rather, a metalanguage artwork that aims at breaking your mind patterns, it is, undoubtedly, written in a language. It’s much harder than one would have thought to cheat all these algorithms with random sequences of symbols.

If you want to go down the Voynich rabbit hole, I recommend reading Nick Pelling’s Cipher Mysteries blog. He is a leading expert on the manuscript and provides detailed commentaries on all topics related.

In the Instagram link below, you can find a copy of a beautiful full scan book of Voynich edited by Raymond Clemens.

I promise to keep an eye on any new papers and keep you updated.

Do you have your own theory? Did you like the article? Let me know what you think!

this gentleman, if you’re into those Tier 8 conspiracy theories

note that despite the hype in most of the articles you find online, both of these languages are already fully translated by humans, so we’re not discovering anything new from a historical perspective.

This time I’ll refrain from claims as “machines can never crack THAT” - in loving memory of alphaGO 2016